SOMA: the first human-aware artificial intelligence platform

June 1, 2017

We’ve been promised a future filled with autonomous vehicles, mixed reality, robotics, and smart homes. However, to deliver on this, our software needs to be able to easily translate our physical presence into the digital space. From autonomous vehicles ‘seeing’ pedestrians as crude bounding boxes to virtual reality experiences still relying on controllers for basic body tracking, it’s evident that our technology is not yet fully ‘human aware.’

Computers need to understand how we’re shaped and the way we move.

Today, we’re announcing the launch of SOMA, the first human-aware artificial intelligence platform that can accurately predict 3D human shape and motion from everyday photos or videos. With SOMA, we look to enable brands and developers to easily capture 3D human shape and motion to power a range of applications.

Built on a statistical model

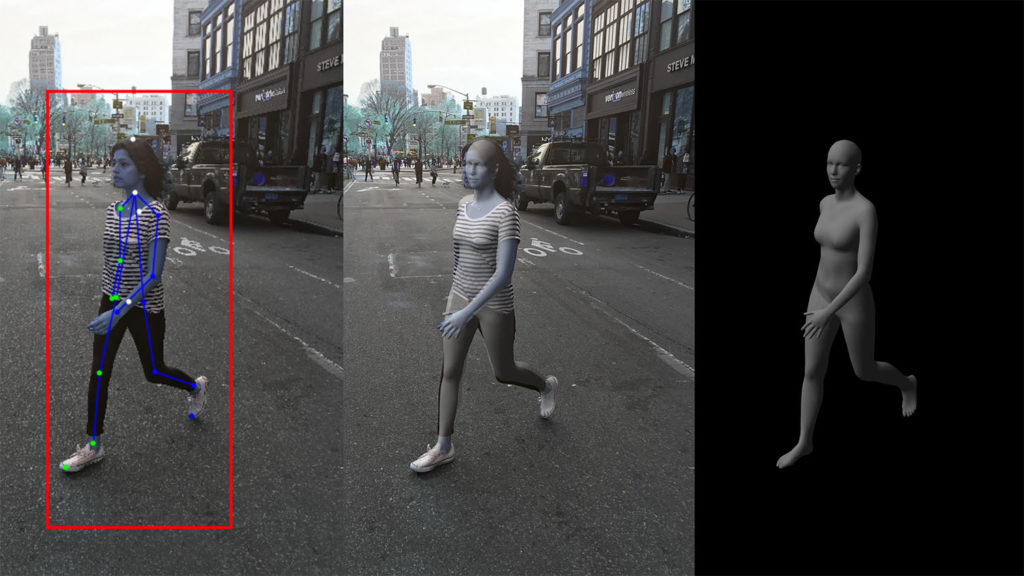

At the core of SOMA is a statistical model of 3D human shape, trained on thousands of 3D scans and motion data. We use this statistical approach to build a template 3D mesh of the human body that can align to any data source. By first understanding the 3D human body statistically, we can then begin to unravel the complete range of body types and the full constraints of natural human movement.
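To make the idea of a statistical body model concrete, here is a minimal sketch of one common way to build such a model: principal component analysis (PCA) over meshes that share a template topology. This is an illustration under stated assumptions, not a description of SOMA's internals. The function names (`fit_shape_model`, `encode`, `decode`) are hypothetical, and the "scans" are synthetic data generated inside the example.

```python
import numpy as np

def fit_shape_model(meshes, n_components):
    """Fit a linear (PCA) shape model.

    meshes: array of shape (n_scans, n_vertices, 3). Every scan is assumed
    to be already registered to the same template mesh, so vertex i means
    the same body location in every scan.
    """
    n_scans = meshes.shape[0]
    flat = meshes.reshape(n_scans, -1)
    mean = flat.mean(axis=0)
    # SVD of the centered data yields the principal directions of variation.
    _, singular_values, vt = np.linalg.svd(flat - mean, full_matrices=False)
    components = vt[:n_components]
    stddevs = singular_values[:n_components] / np.sqrt(max(n_scans - 1, 1))
    return mean, components, stddevs

def encode(mesh, mean, components):
    """Project one registered mesh onto the shape space (a few coefficients)."""
    return components @ (mesh.reshape(-1) - mean)

def decode(coeffs, mean, components):
    """Rebuild a full mesh of shape (n_vertices, 3) from shape coefficients."""
    return (mean + coeffs @ components).reshape(-1, 3)

# Synthetic stand-in for a scan dataset (assumption: not real body data).
rng = np.random.default_rng(0)
template = rng.normal(size=(50, 3))        # 50-vertex toy "template mesh"
directions = rng.normal(size=(2, 50, 3))   # two hidden modes of variation
weights = rng.normal(size=(200, 2))        # 200 toy "scans"
scans = template + np.tensordot(weights, directions, axes=1)

mean, components, stddevs = fit_shape_model(scans, n_components=2)
coeffs = encode(scans[0], mean, components)
reconstruction = decode(coeffs, mean, components)
print("coefficients:", coeffs)
print("max reconstruction error:", np.abs(reconstruction - scans[0]).max())
```

The point of the sketch is that, once every scan is aligned to one template, a whole body shape can be summarized by a handful of coefficients, and new plausible shapes can be generated by varying them.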

Unlocking the smartphone with convolutional neural networks

Previously, our statistical approach relied on emerging 3D scanning or consumer depth-sensing technology as its primary input. By combining our statistical understanding with convolutional neural networks, we can now accurately predict major joints, toes, facial features, and 3D body shape from everyday photos or videos. This brings advanced computer vision, 3D modeling, accurate digital body measurements, and markerless motion capture to everyone through the devices we already own: smartphones. → Mosh Mobile App
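As a rough illustration of how a convolutional network's output can become joint locations, the sketch below assumes the network emits one heatmap per joint, a representation widely used in pose estimation. Whether SOMA works this way is not stated here; the heatmap format and the helper name `keypoints_from_heatmaps` are assumptions made for the example, and the heatmaps are hand-built rather than produced by a real network.

```python
import numpy as np

def keypoints_from_heatmaps(heatmaps):
    """Convert per-joint heatmaps into pixel coordinates.

    heatmaps: array of shape (n_joints, height, width), where each map
    scores how likely the joint is to sit at each pixel (assumed format).
    Returns an array of shape (n_joints, 3) holding x, y, and peak score.
    """
    n_joints, height, width = heatmaps.shape
    flat = heatmaps.reshape(n_joints, -1)
    peak_index = flat.argmax(axis=1)
    ys, xs = np.unravel_index(peak_index, (height, width))
    scores = flat[np.arange(n_joints), peak_index]
    return np.stack([xs, ys, scores], axis=1).astype(float)

# Hand-built heatmaps standing in for network output (hypothetical values).
heatmaps = np.zeros((2, 4, 5))
heatmaps[0, 1, 3] = 0.9   # joint 0 peaks at x=3, y=1
heatmaps[1, 2, 0] = 0.7   # joint 1 peaks at x=0, y=2

keypoints = keypoints_from_heatmaps(heatmaps)
print(keypoints)
```

In a fuller pipeline, 2D keypoints like these would be one of the signals a statistical body model is fitted to in order to recover 3D shape and pose.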

The future is human aware

We designed SOMA to run on a backend server (and later, natively on mobile devices) so that any developer can access it and integrate it into their products and services. Over the summer, we’ll be announcing a series of APIs and SDKs to enable businesses and developers to plug into SOMA.

Powering new industries

We see SOMA pioneering applications across a range of industries. Today, businesses are already working with SOMA to:

Perfect fit to reinvent apparel

SOMA connects consumers by body shape for peer-to-peer social shopping, so they can recommend products they love. Businesses are already looking to enhance their existing recommendation engines by combining rich customer body shape data with past purchases, tech packs, and more. → SOMA for Apparel

Turn actions into super powers

SOMA captures motion without markers. Game developers are exploring how to use SOMA to detect 3D motion and shape from user videos and transfer those actions into interactive games or virtual environments. Leading developers in mixed reality are looking to easily replicate 3D human motion to power immersive real-time AR and VR experiences. → SOMA for Gaming

Make the roads more people friendly

SOMA empowers leaders in mobility to detect and predict pedestrian actions using conventional cameras. Auto manufacturers are using SOMA to process more detail about pedestrians, power gesture-based in-car entertainment systems, and fuel motion-based predictive analytics to improve safety.

Command devices without controllers

SOMA is learning body commands so that intelligent hardware and software can understand gestures without controllers or voice prompts. Developers of smart assistants are looking to SOMA to help their software learn body gesture controls, actions, and more.

The best controller, communicator, and input device has always been our bodies. SOMA finally enables us to communicate with technology the way we were always meant to: naturally and freely, using our bodies. Is your technology human aware?